Is it a bubble? 🫧

Plus: (Model) safety first

Welcome back! Exciting news: I’ve been nominated for this year’s UgenticAI awards in the AI Addict category (guilty as charged). It’s really cool to be recognized for testing AI tools, sharing my findings, and doing my best to stay on top of this wild industry. I’d really appreciate a vote if you’ve got a minute! Thanks for being part of this journey.

P.S. I’m keeping a close eye on any new Gemini announcements from the Made by Google 2025 event starting later today. Updates to come in Friday’s edition!

Anthropic gives Claude an “off switch” for abusive chats

Anthropic is rolling out a new safeguard in Claude Opus 4 and 4.1: the ability to end conversations in extreme cases of persistent abuse or harmful requests.

The move isn’t framed as protecting humans, but rather as protecting the models themselves. Anthropic said it observed a “pattern of apparent distress” when Claude was pushed to respond to requests involving child exploitation or instructions for large-scale violence.

How it works: Claude will only end chats after multiple failed attempts at redirection or when a user explicitly asks it to. It won’t use this feature if a user is in immediate danger of harming themselves or others. Users can still start new conversations afterward.

Planning for the future: The safeguard is part of Anthropic’s new “model welfare” initiative, a just-in-case approach to the question of whether AI systems could someday have moral standing. Even if the science isn’t settled, the company is experimenting with guardrails that reflect that possibility.

What’s next: This move by Anthropic offers an early glimpse into how AI developers may begin grappling with the ethics of protecting models, not just their users. Will other companies follow suit?

Sam Altman calls a bubble

OpenAI CEO Sam Altman told reporters last week that the AI market has entered bubble territory.

“When bubbles happen, smart people get overexcited about a kernel of truth…Are we in a phase where investors as a whole are overexcited about AI? My opinion is yes,” Altman said.

He’s not alone: Altman joins a growing chorus of skeptics, including Alibaba co-founder Joe Tsai, Bridgewater’s Ray Dalio, and Apollo’s chief economist Torsten Slok—who argued last month that today’s AI hype exceeds the dot-com bubble in scale.

Signals from the market: Despite the bubble talk, OpenAI is preparing a secondary stock sale that could value it at $500 billion, up from $300 billion just months ago. Investors are piling in, even as Altman admits OpenAI is not yet profitable and will need trillions of dollars for future data center buildouts.

What it means: The warnings highlight a split between fundamentals and speculation. AI infrastructure and demand may be real and growing, but frothy valuations and easy capital could leave weaker startups exposed when the tide turns.

So... what do you think? Are we in an AI bubble?

Grammarly rolls out AI agents for students and educators

Grammarly is launching a suite of AI agents aimed at tackling common academic writing challenges, from detecting plagiarism to predicting reader reactions.

The details: The tools are built into its new AI-native writing surface, Grammarly Docs, and are available at no extra cost for Free and Pro users (though some advanced features are Pro-only).

For students: AI grader (predicts grades and gives tailored feedback), reader reactions agent, proofreader, paraphraser, and citation finder.

For educators: Plagiarism checker and AI detector, which scan text against academic databases and flag the likelihood of AI-generated content.

Big picture: Universities are grappling with how to integrate AI without encouraging plagiarism. Tools like these could give students structure and feedback while also equipping teachers with detection methods. The big open question, though? Will AI tools promote better writing habits or make it easier to game the system?

Code Faster, Ship Smarter with Korbit AI

Korbit’s AI-powered code review platform helps engineering teams catch bugs, boost code quality, and keep projects moving—all with instant, context-aware feedback on every pull request.

Korbit delivers:

- Automated PR summaries so reviewers spend time fixing, not guessing
- High-signal reviews tailored to your codebase and standards
- Actionable team insights on review velocity, compliance, and quality trends

Join hundreds of engineering leaders already using Korbit to unblock bottlenecks, enforce coding standards, and upskill their devs in real time.

Sketch-to-animation, right in your browser

FrameZero

FrameZero is a lightweight, no-signup animation canvas for sketching ideas and turning them into simple motion. Draw on a whiteboard, set timings on a full timeline, and add transitions, images, code with syntax highlighting, and hand-drawn icons.

How you can use it:

- Storyboard a concept and animate it in minutes
- Turn whiteboard notes into shareable motion explainers
- Mock up UI flows with timed transitions
- Export quick loops for social or presentations

Pricing: Free

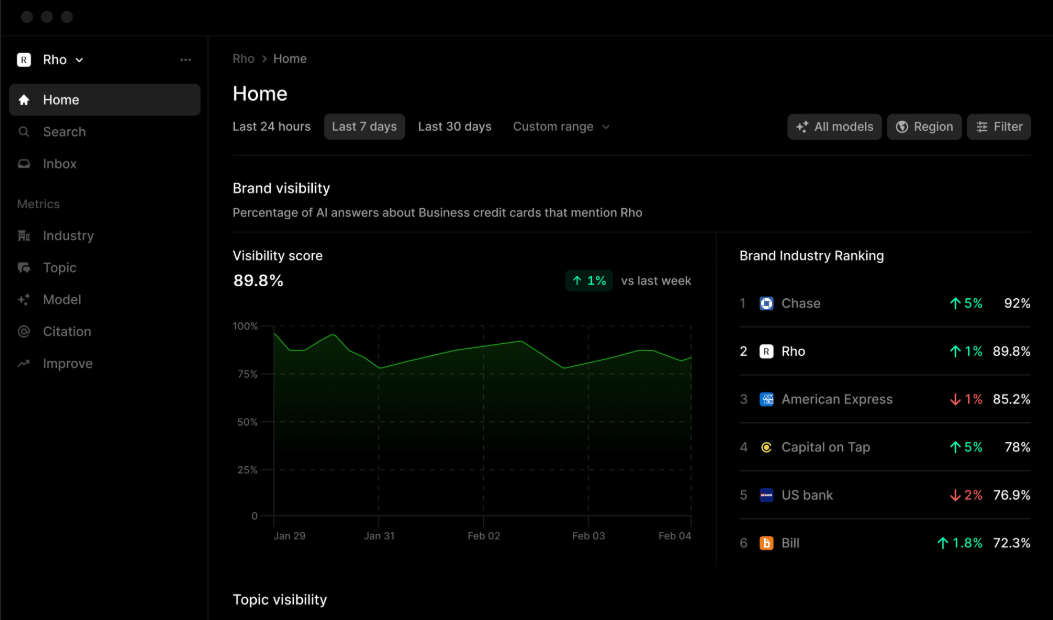

See (and build) your AI visibility

Profound

Profound tracks how AI assistants (ChatGPT, Gemini, Perplexity, Copilot, and more) describe your brand, surfacing the sources they cite, sentiment, and gaps versus competitors. It’s built for marketers who need to monitor “AI share-of-voice” and fix misrepresentations as search shifts to conversational answers.

How you can use it:

- Audit brand mentions across major AI platforms in real time
- Identify the sources influencing AI answers about your brand
- Spot inconsistencies and fix messaging
- Benchmark “AI SOV” against competitors and track lift over time

Pricing: Starts at $500/month

Jobs, announcements, and big ideas

Meta overhauls its AI org with new “Superintelligence Labs” led by Alexandr Wang.

Mustafa Suleyman calls for AI that serves humanity over mimicking the mind.

Meta rolls out AI dubbing and lip-syncing for Reels to boost creator reach.

OpenAI debuts ChatGPT Go in India with upgraded features at a budget price.

Microsoft brings Copilot AI to Excel, letting you write formulas in plain English.

NVIDIA unveils a lighter AI model for G-Assist and expands generative GPU tools.

Parallel, led by ex-Twitter CEO Parag Agrawal, secures $30M to build an AI-native internet.

GPT-5 hype and drama aside…the last week was packed with AI updates you actually need to know.

That’s a wrap! See you Friday.

—Matt (FutureTools.io)

P.S. This newsletter is 100% written by a human. Okay, maybe 96%.