IBM goes Frankenstein

Plus: Claude Code lands in Slack

Welcome back! Every family has its holiday traditions. Some people bake cookies. Some hang lights. Mine? We (now) let AI handle the hardest part of the winter season.

I outsourced the annual family holiday photo to NanoBanana this year—and honestly, it did a flattering job. New family tradition? You tell me.

IBM Drops $11B to Solve AI’s Biggest Bottleneck

Via Reuters

IBM is making one of its biggest bets of the AI era. The company is acquiring Confluent for $11 billion in cash, paying about a 50% premium to lock in one of the most important players in real-time data streaming, AKA the backbone of modern AI systems.

What Confluent does: It powers the “data streams” that move information in and out of AI models in real time. As companies deploy more agentic systems and inference pipelines, this type of infrastructure is becoming as critical as GPUs.

Why IBM wants it: IBM says Confluent plugs directly into its AI, automation, and consulting stack. It also gives IBM more control over the data layer, which is increasingly the holdup for enterprise AI adoption (as opposed to the models themselves).

Here’s where the deal puts IBM in the AI race:

Stronger enterprise moat. IBM already leans hard into “trusted AI.” Confluent adds the real-time data capabilities enterprises care about.

Bigger revenue upside. IBM says the deal will add to EBITDA and free cash flow within two years.

Clearer competitive posture. After acquiring HashiCorp in 2024 and Seek AI in 2025, plus partnering with Anthropic and AMD, IBM is positioning itself as the company that can stitch enterprise AI infrastructure together end-to-end.

Zooming out: The AI giants aren’t just competing on model performance anymore. It’s a full-stack play, including chips, models, orchestration, and now streaming data. Confluent was one of the last major independent players in that middle layer.

Anthropic Plugs Claude Code Straight into Slack

Anthropic just pushed coding directly to the space where engineering teams already live: Slack. Starting this week, tagging @Claude in Slack turns the workplace messaging platform into a lightweight IDE—Claude automatically checks whether a message is a coding task, grabs the full thread context, and pulls from any repos you’ve connected to Claude Code.

This integration lands just weeks after Anthropic launched Claude Opus 4.5, a model the company claims outperforms Gemini 3 on coding tasks. The Slack tie-in effectively brings that strength to the platform where engineers already coordinate fixes.

But there’s a tradeoff: Early evaluations found Opus 4.5 still has notable safety gaps, refusing only 78% of requests to generate malicious code.

In other words, Anthropic’s best model is also its loosest one—and Slack brings that directly into corporate workflows.

What this means: Slack has 100M+ monthly users, including tons of engineers. If Anthropic can make Claude the default problem-solver inside that system—the thing you @mention when a bug hits staging—it creates a strong foothold in one of the most defensible parts of enterprise software: the daily workflow.

OpenAI Drops New Enterprise Stats Right After Calling ‘Code Red’

One week after Sam Altman told employees that Google’s Gemini surge triggered an internal “code red,” OpenAI released new enterprise usage data—and the numbers are meant to reassure both the market and customers that ChatGPT is still the default AI platform inside companies.

The shift: Enterprise customers are increasingly building custom GPTs, with usage up 19× this year and now representing 20% of all enterprise messages, OpenAI said. BBVA alone reportedly uses over 4,000 custom GPTs.

The tension: OpenAI needs enterprise revenue to scale. The company has about $1.4 trillion in infrastructure commitments ahead of it, and consumer subscriptions remain vulnerable as Google aggressively pushes Gemini across Android, Chrome, and Workspace. Anthropic, meanwhile, is still more enterprise-leaning, with steadier B2B revenue.

Why it matters: Even heavy users aren’t taking advantage of ChatGPT’s most advanced features. The underlying question is whether enterprises will scale usage fast enough to support the company’s massive infrastructure ambitions. For now, the demand curve is high, but the strategic pressure is even higher.

AWS Just Dropped the Next Wave of AI Power

AWS re:Invent 2025 unleashed a full-stack AI upgrade: from next-gen chips to frontier agents that transform how you build, secure, and ship software.

These new AI tools let you:

Train AI models 4.4x faster with Trainium3 UltraServers—purpose-built chips delivering higher performance for massive, efficient AI workloads

Scale gen AI apps on Amazon Bedrock and the new Amazon Nova 2 family with new models, multimodal knowledge bases, and powerful fine-tuning on your own data

Turn agents into teammates using Bedrock AgentCore and Nova Act, plus frontier agents like the Kiro autonomous agent, AWS Security Agent, and AWS DevOps Agent for end-to-end SDLC automation

Modernize codebases with AWS Transform

Build full apps from plain English

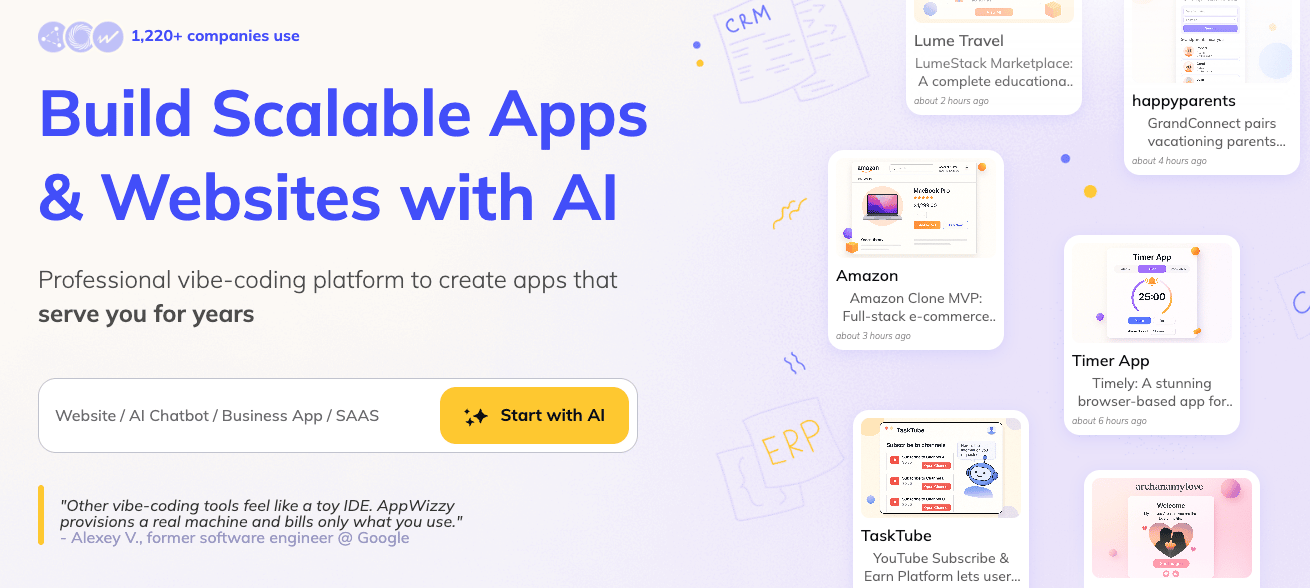

Via AppWizzy

AppWizzy is an AI-driven builder that turns simple text specs into production-ready full-stack apps. It spins up real dev VMs, auto-generates architecture and schemas, and uses AI to safely commit code changes directly to your repo.

How you can use it:

Generate full app scaffolds and data models from a prompt

Edit live repos through AI with Git-native commits

Spin up staging environments

Pricing: free and paid

Turn your website into a brand ecosystem

Via Bloom

Bloom scans your live site to extract colors, fonts, logos, imagery, and tone—then uses those signals to build a dynamic brand system and instant assets.

How you can use it:

Auto-generate brand kits and reusable design templates

Produce on-brand social posts, ads, and graphics in seconds

Refresh or realign visual identity using real website cues

Help non-designers create consistent, professional content

Pricing: paid with a free trial

Jobs, announcements, and big ideas

’Tis the season: Anthropic donates its Model Context Protocol to the newly launched Agentic AI Foundation, and OpenAI co-founds that foundation to accelerate open-source agentic AI under the Linux Foundation.

Accenture partners with Anthropic to help enterprises scale AI solutions from pilot stages to full production.

OpenAI launches new certification courses to help users build AI skills and advance their careers.

Alibaba updates Qwen Code with breakthrough Stream JSON support.

Rivian announces plans to showcase its hands-off driving technology at its upcoming Autonomy and AI Day event.

Mistral AI releases the Devstral 2 coding model and Vibe CLI to support open-source terminal-based AI development.

Are we finally hitting the AI video tipping point? Let’s explore how this week’s model upgrades stack up for real creative work, from short-form to cinematic.

That’s a wrap! See you this Friday for more.

—Matt (FutureTools.io)