Google’s flash ✨

Plus: GPT-5 dons a lab coat

Welcome back! At the start of 2025, Google looked cornered. Antitrust judges circling. AI startups eating its lunch. A White House openly hostile to Big Tech. Chrome-for-sale rumors that didn’t sound that far-fetched.

Fast-forward 12 months, and Google has survived the courts, held its grip on search and ads, and proven it can adapt. Google might just be the tech turnaround story of the year. What do you think?

P.S. The Future Tools team is taking next week off to enjoy the holidays with our friends and families. We’ll be back in your inbox before the new year with a fun edition, then back to our regularly scheduled programming the first week of the year. Thank you so much for being part of this community in 2025! Excited for all that’s to come in 2026.

Google Makes Gemini 3 Flash the Default

Via Google

Speaking of Google…on Wednesday, it launched Gemini 3 Flash, a faster, cheaper model designed to be the new workhorse. It’s now the default inside the Gemini app and AI Mode in Search.

Here’s what’s new:

Stronger performance than the last Flash. Google says Gemini 3 Flash makes a big jump over Gemini 2.5 Flash and can even trade punches with frontier-tier models (including Gemini 3 Pro and GPT-5.2) on some evaluations.

More multimodal-native behavior. The model handles mixed inputs (video, sketches, audio) better than earlier models and returns more “visual” answers (think tables and images).

Pricing moved up slightly—but Google argues it nets out. It’s listed at $0.50 / 1M input tokens and $3.00 / 1M output tokens, up from 2.5 Flash. Still, Google claims it’s faster and uses fewer tokens than 2.5 Pro on “thinking” tasks, which could reduce total spend for certain workloads.
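To make the pricing math concrete, here is a rough sketch that turns the listed per-token prices into a dollar estimate. The function name and the example workload (2M input tokens, 500K output tokens) are made up for illustration; only the two prices come from the listing above, and real bills may differ based on details not covered here.

```python
# Listed Gemini 3 Flash prices from the paragraph above, in USD per 1M tokens.
INPUT_PRICE_PER_M = 0.50
OUTPUT_PRICE_PER_M = 3.00


def estimate_cost(input_tokens, output_tokens,
                  input_price=INPUT_PRICE_PER_M,
                  output_price=OUTPUT_PRICE_PER_M):
    """Return an estimated cost in USD for a given token count.

    Hypothetical helper for illustration only; it is not part of any Google SDK.
    """
    input_cost = input_tokens / 1_000_000 * input_price
    output_cost = output_tokens / 1_000_000 * output_price
    return input_cost + output_cost


if __name__ == "__main__":
    # Assumed workload: 2M input tokens and 500K output tokens.
    print(f"${estimate_cost(2_000_000, 500_000):.2f}")  # prints $2.50
```

Because output tokens cost six times as much as input tokens at these prices, a model that answers with fewer output tokens can lower the total even when its per-token rate is higher. That is the trade-off behind Google's "nets out" argument.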

And that’s not all: Google is also threading “build with Gemini” deeper into the product. Its vibe-coding tool Opal is now integrated into Gemini on the web via the Gems manager, letting people create mini-apps from plain-English prompts. There’s also a visual step-based editor if you want to tweak the workflow without touching code.

The bigger picture: Model releases are starting to feel less like “new brains” and more like new defaults. Google is trying to position Gemini as the place where you search, build, and iterate before you even think to open anything else.

OpenAI and Amazon Consider a $10B+ Investment

OpenAI is reportedly in discussions with Amazon about a potential investment that could exceed $10 billion, alongside an agreement to use Amazon’s in-house AI chips.

What we know so far: The talks are still fluid, but the deal would likely pair capital with infrastructure. Amazon would deepen its role as a compute provider by supplying its Trainium and Inferentia chips, while OpenAI gains another hyperscale partner beyond Microsoft.

The timing matters: OpenAI finalized its for-profit restructuring in October, loosening Microsoft’s exclusivity and giving OpenAI more freedom to raise capital and partner across the ecosystem. OpenAI has already committed more than $1.4 trillion in long-term infrastructure deals, including a recent $38B capacity agreement with AWS.

Why it matters: This is what diversification looks like in the AI era. OpenAI is no longer tied to a single cloud or capital source, and Amazon is signaling that it doesn’t want all its AI chips riding on Anthropic alone.

GPT-5 Shows It Can Perform Novel Lab Work

GPT-5 has, for the first time, demonstrated the ability to carry out forms of novel laboratory work. The results suggest the model can go beyond analyzing papers or summarizing data to contributing directly to experimental workflows.

What it did: In controlled settings, GPT-5 was able to reason through experimental steps, generate hypotheses, and help design or interpret lab processes in ways that meaningfully supported human researchers.

The bigger picture: AI is edging closer to becoming an active collaborator in scientific discovery. If models like GPT-5 can handle parts of the scientific loop that traditionally require hands-on expertise, it could change how quickly breakthroughs move from idea to experiment.

Nebius Token Factory—Post-training

Nebius Token Factory just launched Post-training—the missing layer for teams building production-grade AI on open-source models.

You can now fine-tune frontier models like DeepSeek V3, GPT-OSS 20B & 120B, and Qwen3 Coder across multi-node GPU clusters with stability up to 131k context. Models become deeply adapted to your domain, your tone, your structure, your workflows.

Deployment is one click: dedicated endpoints, SLAs, and zero-retention privacy. And for launch, fine-tuning GPT-OSS 20B & 120B (Full FT + LoRA FT) is free until January 9. This is the shift from generic base models to custom production engines.

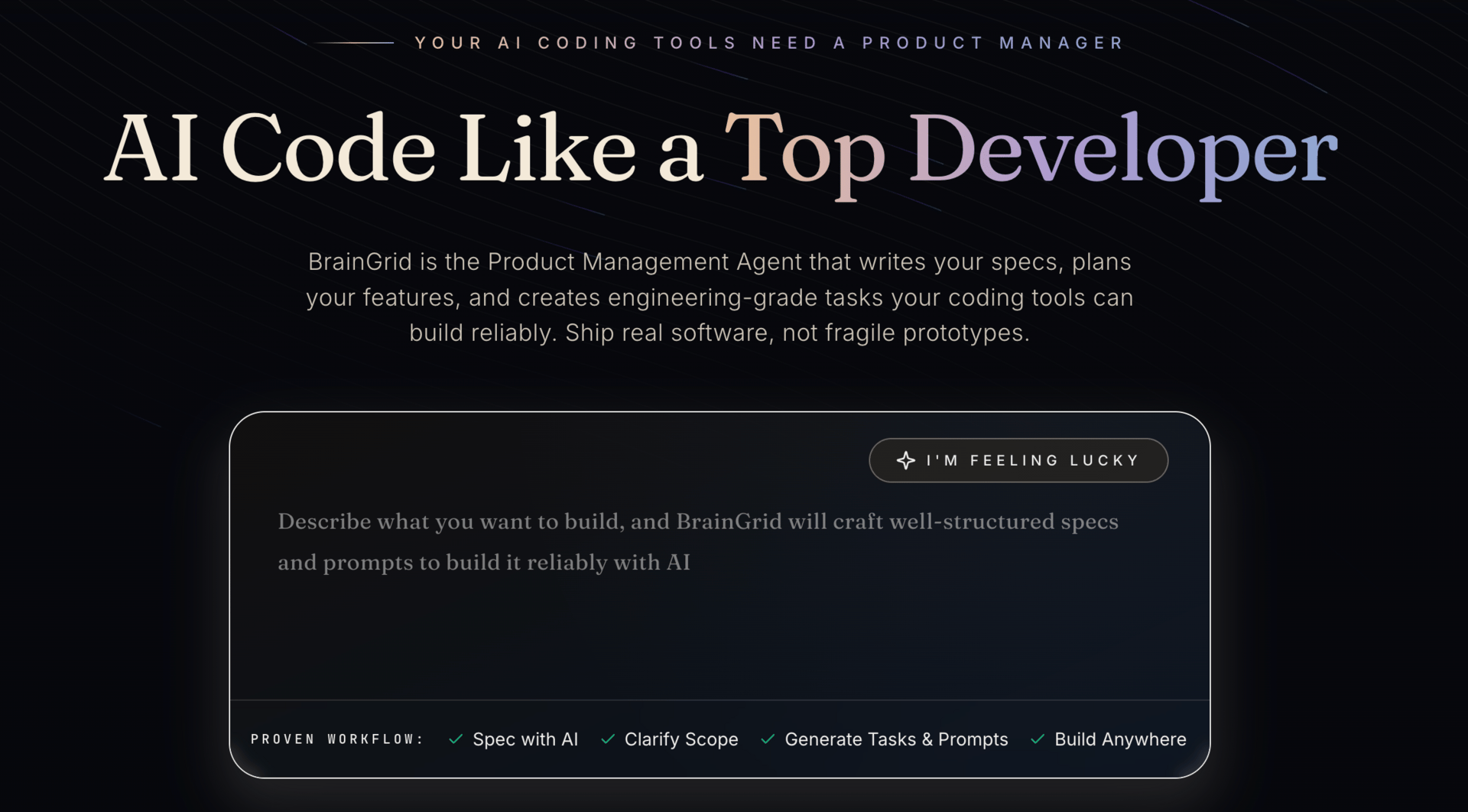

Turn ideas into engineering specs

Via BrainGrid

BrainGrid is an AI product management agent that converts plain-English product ideas into scoped tasks and production-ready prompts by analyzing your codebase.

How you can use it:

- Translate feature ideas into clear, testable engineering specs

- Generate acceptance tests and dependency mappings automatically

- Review and sanity-check code generated by tools like Cursor or Claude Code

- Keep AI outputs aligned with your existing repo and architecture

Pricing: Paid with a free trial

An AI workspace built for answers

Via Sup AI

Sup AI is a unified AI platform that automatically routes tasks across frontier models, combines their outputs, and surfaces answers with confidence scoring and citations.

How you can use it:

- Ask complex questions and get cited, confidence-scored responses

- Run research workflows across multiple models without manual switching

- Store and query project-specific memory with secure team access

- Balance cost and performance with automatic model selection

Pricing: Free and paid plans available

Jobs, announcements, and big ideas

Luma AI introduces a new Dream Machine model that generates videos using only start and end frames.

NVIDIA unveils the RTX PRO 5000 Blackwell GPU with 72GB of memory for advanced desktop agentic and generative AI workloads.

Google releases FunctionGemma, a Gemma 3 model fine-tuned for custom function calling.

Anthropic expands its AI vending machine experiment with the launch of phase two under Project Vend.

OpenAI updates ChatGPT model guidelines to introduce new teen safety measures under its U18 Principles.

The next wave of AI video is here. Here’s what changes with Runway 4.5 and Kling AI, and what creators can realistically make today.

That’s a wrap! Happy holidays—hope you and your loved ones enjoy a safe and fun holiday season. See you soon!

—Matt (FutureTools.io)